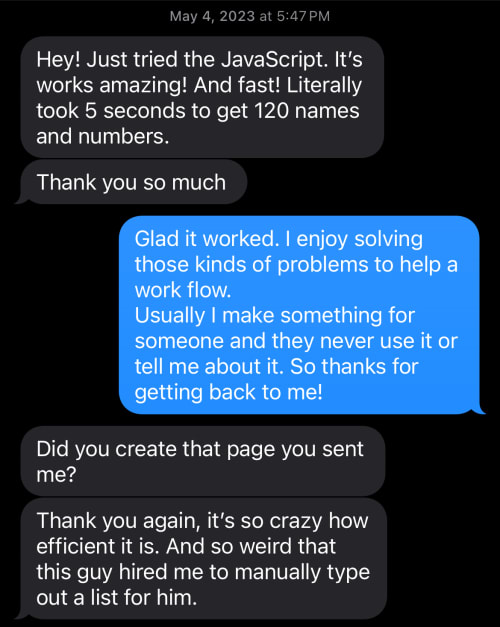

Someone came to me needing a list of businesses from Google Maps, specifically each business’s name and phone number. This was a task they had at their job. Why? I do not know. But it was tedious, monotonous, laborious, and boring, so they sought me out to solve it for them.

Below are the instructions I created for them. But first, here are the texts we had about it and how much it helped. I ended up making this one of my listed projects as well.

💡 Disclaimer:

Web scraping is when you go through the HTML of webpages you don’t own and pull out the data you want. Because websites don’t always want this, it isn’t guaranteed to work, and the scraping process may need regular updates to keep working.

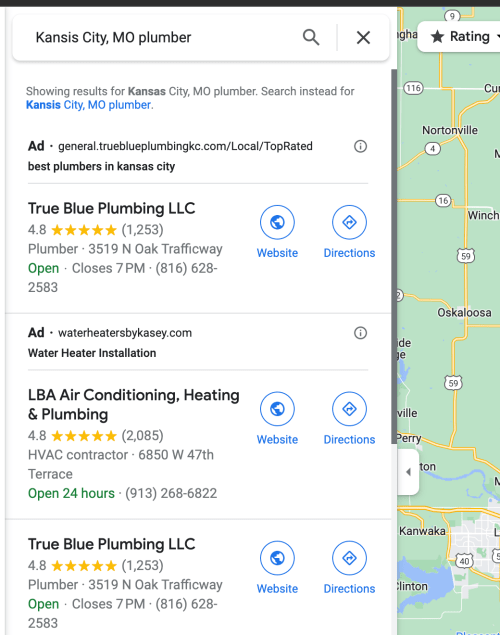

1. Google Search

Go to Google Maps and search for the city, state, and type of business you want. The goal is to get the results feed to show up as a list of businesses.

Search in the form of City, ST business type. Example: Kansas City, MO plumber
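If you run this kind of search often, the link can be built in code instead of typed. This is only a sketch: it assumes Google’s documented Maps URL format (`/maps/search/?api=1&query=...`), and `buildMapsSearchUrl` is a made-up helper name. Opening the resulting link should land on the same kind of results list, but that is not guaranteed.

```javascript
// Hypothetical helper: builds a Google Maps search link from the
// "City, ST business type" pattern described above.
function buildMapsSearchUrl(city, state, businessType) {
  const query = `${city}, ${state} ${businessType}`;
  // URLSearchParams takes care of encoding spaces and commas.
  const params = new URLSearchParams({ api: "1", query });
  return `https://www.google.com/maps/search/?${params.toString()}`;
}

console.log(buildMapsSearchUrl("Kansas City", "MO", "plumber"));
// https://www.google.com/maps/search/?api=1&query=Kansas+City%2C+MO+plumber
```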

Issues To Solve

Here is where you could manually scroll through the list and copy and paste things one by one. To do this programmatically we have to look for patterns, which Google does not make easy. Intentionally, and sometimes unintentionally, they have created HTML that is difficult to predict.

Note that not every business has the same data, which changes the HTML for its entry. Some have reviews and some don’t. Some are open and some are not. This can cause some of the data we scrape to be wrong. Keep that in mind when reviewing the data.
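One way to speed up that review is to flag rows whose phone value does not look like a phone number. The sketch below assumes US numbers formatted like `(816) 555-0123`; the helper name and the sample rows are made up for illustration.

```javascript
// Matches numbers shaped like "(816) 555-0123" and nothing else.
const US_PHONE = /^\(\d{3}\) \d{3}-\d{4}$/;

// Hypothetical helper: returns the rows whose phone is missing or oddly shaped.
function findSuspectRows(rows) {
  return rows.filter((row) => !row.phone || !US_PHONE.test(row.phone));
}

// Made-up sample data. "Closes at 5 PM" is 14 characters long, the same
// length as a formatted phone number, so a length check alone would accept it.
const sampleRows = [
  { name: "A Plumbing", phone: "(816) 555-0123" },
  { name: "B Plumbing", phone: null },
  { name: "C Plumbing", phone: "Closes at 5 PM" },
];

console.table(findSuspectRows(sampleRows)); // lists B Plumbing and C Plumbing
```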

Note as well that the list is not fully shown. When you scroll down to the end of the list, it shows a loading spinner while it requests the next group of businesses to add. To get ALL the businesses, we’ll have to tell Google to load them all by scrolling down. But doing this too fast will make Google think we’re a bot (which we are when scraping data), so we have to slow down how we scroll to trick Google into thinking we’re human.
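The slow-scrolling idea can be sketched as a small loop that scrolls, waits, and repeats. The random extra delay is my own addition, and whether it helps at all is a guess. The names `sleep`, `jitteredDelay`, and `scrollSlowly` are hypothetical.

```javascript
// Resolves after `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Base delay plus a random extra, so the pauses are not all identical.
// `random` can be swapped out to make the result predictable.
function jitteredDelay(baseMs, spreadMs, random = Math.random) {
  return baseMs + Math.floor(random() * spreadMs);
}

// Scrolls `feed` in steps with a pause after each one, until `isDone(feed)`
// returns true or `maxSteps` is reached. Returns the number of steps taken.
async function scrollSlowly(feed, isDone, maxSteps = 50) {
  let steps = 0;
  while (steps < maxSteps && !isDone(feed)) {
    feed.scrollTo({ top: feed.scrollTop + 2000, behavior: "smooth" });
    await sleep(jitteredDelay(1000, 500));
    steps += 1;
  }
  return steps;
}
```

Here `feed` would be the scrolling results list, and `isDone` a function you supply that checks whether the end of the list has been reached.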

2. Open the JavaScript Console

To run JavaScript in your browser and pull this data out, you need to open the Developer Tools and the JavaScript console. On a Mac using Chrome you can do this quickly with Command+Option+J. Alternatively, open the View menu, go to Developer at the bottom, and click JavaScript Console.

3. Paste In the Code

Before you paste in the code know what will happen when you submit it.

- You’ll see the business list scroll and pause multiple times. It’s doing this to trigger Google to load all the businesses. The script is guessing how many businesses there are and how long loading will take. At most this will take about 50 seconds, but usually much less, likely about 5-10 seconds. We have to do this slowly so Google will allow it.

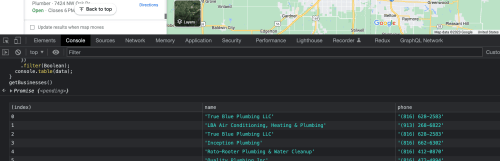

- In the console you’ll see something like Promise{<pending>}. This is just because it knows it has to wait for something to happen and it hasn’t happened yet.

- When it’s all done, it will print a table of the scraped data below the code and the Promise message explained above. The table shows index, name, and phone. Index is just the count, starting at 0. Name is the business name. Phone is hopefully the phone number. This was the trickiest to predict, so sometimes it may be wrong. You can manually review any that don’t look right.
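To see where the pending Promise message comes from, here is a tiny standalone example that is not part of the scraper. The function name `waitOneSecond` is made up.

```javascript
// An async function hands back a Promise right away, before its work is done.
async function waitOneSecond() {
  await new Promise((resolve) => setTimeout(resolve, 1000));
  return "done";
}

const result = waitOneSecond();
console.log(result); // a pending Promise, because the second has not passed yet
result.then((value) => console.log(value)); // logs "done" about a second later
```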

Now copy this code and paste it in the console and press ENTER.

    async function getBusinesses() {
      // The scrollable list of results.
      const feed = document.querySelector('div[role="feed"]');
      if (!feed) {
        console.warn("No results list found. Run a Google Maps search first.");
        return;
      }

      // Scroll down in steps, pausing 1 second each time, for at most 50 steps.
      for (let count = 0; count < 50; count += 1) {
        feed.scrollTo({ top: feed.scrollTop + 2000, behavior: "smooth" });
        await new Promise((resolve) => setTimeout(resolve, 1000));

        // Stop early once the end-of-list message appears.
        const possibleEndSpan = feed.lastElementChild?.querySelector("div p span");
        if (possibleEndSpan?.textContent === "You've reached the end of the list.") {
          break;
        }
      }

      // Takes the last span matching the selector and returns the final text of
      // its parent, but only when that text is 14 characters long (the length
      // of a number formatted like "(816) 555-0123"). Otherwise returns null.
      const findPhone = (el, selector) => {
        const spans = [...el.querySelectorAll(selector)];
        const text = spans[spans.length - 1]?.parentNode?.lastChild?.textContent;
        return text?.length === 14 ? text : null;
      };

      const data = [...feed.childNodes]
        .map((node) => {
          const el = node.firstChild;
          const isBusiness = el && el.ariaLabel && el.role === "article";
          if (!isBusiness) return null;

          const name = el.ariaLabel;
          // CSS.escape keeps names containing quotes from breaking the selector.
          const base = `div div[aria-label="${CSS.escape(name)}"] div div div div div div div span`;
          // Try the stricter selector first, then fall back to any nested span.
          const phone =
            findPhone(el, `${base} span[aria-hidden="true"]`) ??
            findPhone(el, `${base} span`);
          return { name, phone };
        })
        .filter(Boolean);

      console.table(data);
    }

    getBusinesses();

4. Copy and Paste the Table

Now you can do some of the manual work.

- Click on the first row (index 0) and drag down to the last phone number.

- Copy

- Right-click an empty cell in your spreadsheet, choose Paste Special, and select Values Only (or press Command+Shift+V).

Note: This pastes all 3 columns. You can just delete the index column when done. Also note that, because the console prints them as code, the names and phone numbers are inside single quotes. You’ll have to remove those in the spreadsheet after you paste, which you can do in bulk with the spreadsheet’s find and replace.
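If the quotes and the extra index column get annoying, one possible alternative is to turn the rows into tab-separated text, which pastes into a spreadsheet as plain columns. This is a sketch with a hypothetical helper, `toTsv`, and made-up sample rows. The script above only prints its table, so to use this with real data you would need to change it to hand the rows back (for example by adding `return data;` at the end of `getBusinesses`).

```javascript
// Hypothetical helper: turns [{ name, phone }, ...] into tab-separated text.
function toTsv(rows) {
  // Tabs and line breaks inside a value would break the columns, so swap them for spaces.
  const clean = (value) => String(value ?? "").replace(/[\t\r\n]+/g, " ");
  const lines = rows.map((row) => `${clean(row.name)}\t${clean(row.phone)}`);
  return ["name\tphone", ...lines].join("\n");
}

// Made-up sample data.
const tsvSample = [
  { name: "A Plumbing", phone: "(816) 555-0123" },
  { name: "B Plumbing", phone: null },
];

console.log(toTsv(tsvSample));
```

In the Chrome DevTools console, `copy(toTsv(rows))` should put the text straight on your clipboard, since `copy` is one of the console’s built-in utilities.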

Alternatives

This will work for a while, until Google changes their site. If the task is important enough to be worth it, there are some other ways to do this.

- Google has a specific API that code can call to get all this data directly, without scraping their website. This is what Google wants you to use instead of scraping. However, there is a cost behind it. Not a lot: cents per request, like 1 cent or even less depending. But you’d have to make the account, give it credit card data, and then have someone write the code to make the requests. (This is what you sometimes see on websites that show a Google Map.)

- I found that some developers have made specific tools to do this: Local Scraper, Bright Data, etc. They cost money, of course, and seem to be aimed at larger organizations.

- There are also programming libraries that these scrapers use, like Puppeteer. They can be programmed to browse the web for you invisibly, going to any page, clicking and typing as if they were you, and returning all the data they scrape. They can also run in the background on a server or computer on a schedule, to pick up anything that changes from day to day. All of these have free ways of doing it, but they would require a lot of coding, plus updating as websites change, and may not be worth the effort for what you get paid to do it.
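To give a feel for the API route, here is a rough sketch of what such a request might look like. I am assuming the API in question is Google’s Places API (New) Text Search; the endpoint, header names, and field names below are from memory and should be checked against Google’s documentation before use. The function names and `YOUR_API_KEY` are placeholders.

```javascript
// Assumption: Places API (New) Text Search endpoint.
const PLACES_TEXT_SEARCH_URL = "https://places.googleapis.com/v1/places:searchText";

// Hypothetical helper: builds the arguments for fetch() without sending anything.
function buildTextSearchRequest(textQuery, apiKey) {
  return {
    url: PLACES_TEXT_SEARCH_URL,
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "X-Goog-Api-Key": apiKey,
        // Assumed field names: ask only for the business name and phone number.
        "X-Goog-FieldMask": "places.displayName,places.nationalPhoneNumber",
      },
      body: JSON.stringify({ textQuery }),
    },
  };
}

// Sends the request and maps the response to { name, phone } rows.
async function searchBusinesses(textQuery, apiKey) {
  const { url, options } = buildTextSearchRequest(textQuery, apiKey);
  const response = await fetch(url, options);
  if (!response.ok) {
    throw new Error(`Places request failed: ${response.status}`);
  }
  const { places = [] } = await response.json();
  return places.map((place) => ({
    name: place.displayName?.text ?? null,
    phone: place.nationalPhoneNumber ?? null,
  }));
}
```

With a real key, something like `searchBusinesses("Kansas City, MO plumber", "YOUR_API_KEY").then(console.table)` in Node 18 or later would print a similar table to the scraper’s, without any scrolling tricks.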